Professor Paul Arbon explains how his team developed a user-friendly toolkit that communities can use to understand their likely level of resilience in the face of disaster.

The concept of ‘community resilience’ is widely used by community leaders, policy makers, emergency management practitioners and academics in Australia but, despite its popularity, there are widely differing views on its meaning, application and utility. This lack of consensus undermines its usefulness when developing emergency and disaster management policies and plans at national, state, territory and local levels.

This paper discusses the development of a practical toolkit that can be used by communities to understand the likely level of resilience in the face of disaster. The toolkit takes an all-hazards approach and helps local policy makers to set priorities, allocate funds, and develop emergency and disaster management programs that build local community resilience.

The toolkit is the result of a project funded by the National Emergency Management Program (NEMP) that supports the 2009 Council of Australian Governments National Disaster Resilience Statement and the National Strategy for Disaster Resilience. The project was completed in several stages with the assistance of a National Advisory Committee and a project working group. A review of literature was used to develop a definition and model of community disaster resilience and a scorecard was designed to assess levels of existing community disaster resilience. Guidelines were constructed for its use. The definition, model and scorecard were reviewed and refined with the help of two communities before a final version was trialled in four communities across Australia (Northern Territory, Queensland, South Australia and Western Australia). The feedback from those communities was used to finalise the scorecard and guidelines. The final version of the toolkit is available for use by communities interested in measuring their disaster resilience and supports them in plans to strengthen resilience in the future.

In 2009 the Council of Australian Governments (COAG) agreed to adopt a whole-of-nation resilience-based approach to disaster management, which recognises that a national, co-ordinated and co-operative effort is required to enhance Australia’s capacity to withstand and recover from emergencies and disasters. The National Strategy for Disaster Resilience (2011) sets out how the nation should achieve the COAG vision, emphasising that disaster resilience is not solely the domain of emergency services but requires society as a whole to be involved. In response, the Torrens Resilience Institute, a collaborative effort of the University of Adelaide, Cranfield University (UK), Flinders University, and the University of South Australia, developed a community disaster resilience model and assessment tool.

Generally, Australians have become more aware of the potential for a range of disastrous events to occur. There is a growing awareness that disaster readiness involves more than an efficient emergency service and rapid response capability during the acute phase of a catastrophic event. The process of recovery following an emergency takes time, and for some communities and families, much more time than for others. In the world of individual psychology, the term ‘resilience’ is used to describe the trait that allows a person to move through a challenge, adapt if necessary and return to a (relatively) healthy state. The term is now being applied to whole communities. Community resilience is a process of continuous engagement that builds preparedness prior to a disaster and allows for a healthy recovery afterwards. Academic research is beginning to understand the complexities of this process, often using long-term studies and complex measurements (Flanagan 2011, Longstaff 2010, Maguire et al. 2008, Zobel 2011). This project used the available research-based knowledge about resilience to create a model of community disaster resilience and then translated that model into a user-friendly tool that allows people to assess the current level of likely disaster resilience and create action plans to strengthen resilience in their community.

The scientific and grey literature reveals a wealth of information, definitions, frameworks and models of community resilience. Many articles provide tools that can be used by communities to build their overall resilience to issues that may affect their health and wellbeing (Cox et al. 2011, Emergency Volunteering 2011, Longstaff 2010, Mayunga 2007). Those articles that specifically consider community disaster resilience have a focus on individuals, community vulnerability and risk assessments (Fekete 2011, Fekete et al. 2009, Flanagan et al. 2012, Frommer et al. 2011, Insurance Council of Australia 2008, James Cook University 2010). Despite the range and depth of material, no standard definition of community disaster resilience was found, nor was there a published, validated tool that communities could easily use to assess their ability to prepare for an emergency event at the community level rather than the individual level.

For the purpose of this project a community was defined as a group of people living together within a defined geographical and geopolitical area such as a town, district or council. The community disaster resilience toolkit is designed so that community members can collectively accept their roles to:

The project team worked on the tool in conjunction with a Project Advisory Committee and a project working group. The National Advisory Committee met quarterly to oversee the general direction of the project, while the Working Group met in person or reviewed draft documents at varying intervals depending on the work being done.

Project Advisory Group – a national group with a broad perspective drawn from federal and state government. Members were:

Working Group – members drawn from the universities that comprise the Torrens Resilience Institute as well as other government and emergency sector representatives. They were chosen from different specialties to contribute their varied expertise, to assist with the development of the definition of community disaster resilience and the key elements of a model and criteria for the Scorecard. Members were:

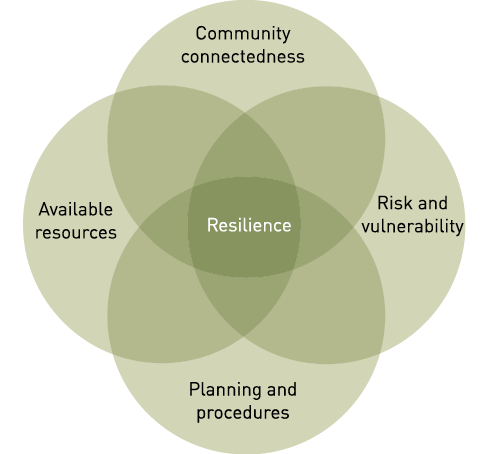

Based on the literature review, the project working group identified recurring themes and concepts that informed the model of community disaster resilience (see Figure 1). The model is consistent with available research and identifies the overlapping relationships of community connectedness, risk/vulnerability, planning/procedures and available resources as comprising a community’s disaster resilience.

Figure 1: Community Disaster Resilience Model.

Source: www.torrensresilience.org

Using this model, questions that could illuminate each of the four components were drafted from the perspective of an informed community member rather than a research scholar. The response to each question would be a ranking on a five-point Likert scale, with the responses ranging from extremely low to very high. As with the questions themselves, this approach was deemed by the working group as the one most likely to work for informed community members using the toolkit. The initial draft of nearly 100 questions was reduced to 22 by the working group.

The scoring levels for each question were based on research where available, or otherwise on the best judgment of the working group, drawing on its knowledge and experience of communities and disasters. Where possible, information sources such as the Census or locally developed planning documents were used. Examples of the scoring options are presented in Table 1. If there was disagreement among committee members on a score, a lower rather than higher score was allocated, as the disagreement itself indicates that there is work to be done, and community engagement in follow-up activities is one goal of the process. Summary scoring consists of summing the points for the questions in each section and then for the scorecard as a whole. This sum identifies whether the community falls in the lowest 25 per cent of the possible points (red or ‘danger zone’), is in the middle 50 per cent of points (caution zone), or has ranked itself in the highest 25 per cent of points (green or ‘going well’).

Table 1: Example of the Scorecard.

| Question | Score 1 | Score 2 | Score 3 | Score 4 | Score 5 | Information resource |
|---|---|---|---|---|---|---|
| 1.1 What proportion of your population is engaged with organisations (e.g., clubs, service groups, sports teams, churches, library)? | <20% | 21–40% | 41–60% | 61–80% | 81–100% | Census |
| 1.2 Do members of the community have access to a range of communication systems that allow information to flow during an emergency? | Don’t know | Has limited access to a range of communication | Has good access to a range of communication but damage resistance not known | Has very good access to a range of communication and damage resistance is moderate | Has wide range of access to damage-resistant communication | Self-assessment |
| 1.3 What is the level of communication between local governing body and population? | Passive (government participation only) | Consultation | Engagement | Collaboration | Active participation (community informs government on what is needed) | International Association for Public Participation (IAP2) Spectrum http://c.ymcdn.com/sites/www.iap2.org/resource/resmgr/imported/IAP2%20Spectrum_vertical.pdf |
| 1.4 What is the relationship of your community with the larger region? | No networks with other towns/region | Informal networks with other towns/region | Some representation at regional meetings | Multiple representation at regional meetings | Regular planning and activities with other towns/region | Self-assessment |
| 1.5 What is the degree of connectedness across community groups? (e.g. ethnicities/sub-cultures/age groups/new residents not in your community when last disaster happened) | Little/no attention to subgroups in community | Advertising of cultural/cross-cultural events | Comprehensive inventory of cultural identity groups | Community cross-cultural council with wide membership | Support for and active involvement in cultural/cross-cultural events (in addition to previous) | Self-assessment tied to demographic profile; local survey to assess |
| Connectedness score | 25% (5–10) | 26–75% (11–19) | 76–100% (20–25) | | | |

Source: www.torrensresilience.org
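The summary scoring described above can be sketched in a few lines of Python. This is an illustration only and is not part of the toolkit. The function names `consensus_score` and `zone` are hypothetical, taking the minimum of the proposed scores is one reading of the rule that disagreement leads to "a lower rather than higher score", and the band boundaries assume that the zones are quarters of the attainable range (from all 1s to all 5s). That assumption reproduces the 5–10 red band and the 20–25 green band shown for the connectedness section in Table 1.

```python
LIKERT_MIN, LIKERT_MAX = 1, 5  # five-point scale used by every Scorecard question


def consensus_score(proposed):
    """Resolve committee disagreement on one question.

    Assumption: 'a lower rather than higher score' is read as the minimum
    of the scores proposed by committee members.
    """
    if not proposed:
        raise ValueError("at least one proposed score is required")
    for score in proposed:
        if not LIKERT_MIN <= score <= LIKERT_MAX:
            raise ValueError(f"score {score} is outside the 1-5 scale")
    return min(proposed)


def zone(scores):
    """Sum the agreed scores for a section and return (total, zone).

    Assumption: zones are quarters of the attainable range, so the lowest
    quarter is 'red', the highest quarter is 'green', the rest 'caution'.
    """
    if not scores:
        raise ValueError("a section needs at least one scored question")
    total = sum(scores)
    lowest = len(scores) * LIKERT_MIN
    span = len(scores) * LIKERT_MAX - lowest
    position = 4 * (total - lowest)  # integer form of (total - lowest) / span
    if position <= span:
        return total, "red"
    if position >= 3 * span:
        return total, "green"
    return total, "caution"


# Made-up committee proposals for the five connectedness questions.
proposals = [[3, 4], [2], [3, 3], [4, 5], [2, 3]]
agreed = [consensus_score(p) for p in proposals]
print(zone(agreed))  # (14, 'caution')
```

The same `zone` function can be applied to the full list of 22 agreed scores to obtain an overall zone under the same assumption.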

What proportion of your population is engaged with organisations (e.g. clubs, service groups, sports teams, churches, library)?

Do members of the community have access to a range of communication systems that allow information to flow during an emergency?

What is the level of communication between local governing body and population?

What is the relationship of your community with the larger region?

What is the degree of connectedness across community groups? (e.g. ethnicities/sub-cultures/age groups/new residents not in your community when last disaster happened)

What are the known risks of all identified hazards in your community?

What are the trends in relative size of the permanent resident population and the daily population?

What is the rate of the resident population change in the last five years?

What proportion of the population has the capacity to independently move to safety? (e.g. non-institutionalised, mobile with own vehicle, adult)

What proportion of the resident population prefers communication in a language other than English?

Has the transient population (e.g. tourists, transient workers) been included in planning for response and recovery?

What is the risk that your community could be isolated during an emergency event?

To what extent and level are households within the community engaged in planning for disaster response and recovery?

Are there planned activities to reach the entire community about all-hazards resilience?

Does the community actually meet requirements for disaster readiness?

Do post-disaster event assessments change expectations or plans?

How comprehensive is the local infrastructure emergency protection plan? (e.g. water supply, sewerage, power system)

What proportion of population with skills useful in emergency response/recovery (e.g. first aid, safe food handling) can be mobilised if needed?

To what extent are all educational institutions (public/private schools, all levels including early child care) engaged in emergency preparedness education?

How are available medical and public health services included in emergency planning?

Are readily accessible locations available as evacuation or recovery centres (e.g. school halls, community or shopping centres, post office) and included in resilience strategy?

What is the level of food/water/fuel readily available in the community?

The draft instrument was reviewed with members of two communities for clarity of language and the likelihood that a community committee could reach consensus on a score. The final test version of the scorecard, with instructions, was reviewed and approved by the Project Advisory Committee.

The Project Advisory Committee approved a set of pilot communities in different risk zones and of various sizes. No large urban areas were included due to concerns about meeting project deadlines. Possible test communities were contacted through the appropriate head of local government. Of these communities, six expressed interest and four were able to complete the Scorecard and provide feedback on the instructions, the process and the tool itself within the project’s timeframe. Each community identified a community committee of 10–15 members that would meet three times to complete the Scorecard and give feedback to the project team. Two members of the project team went to each test community for the first meeting of the community committee to provide an orientation and answer questions about the Scorecard. It was explained to the committee that they might meet within a two-week timeframe to complete a draft score and then two weeks later for a final scoring meeting and evaluation. Two members of the project team subsequently attended this final meeting in each community to gather observations and comments from the participants.

Assessment of feedback from the test sites on the model and the tool was based on responses to a series of questions asked of all focus group participants. Because the Scorecard was not a research instrument but a means of informing and engaging community members, participants were asked whether or not they thought the components in the Scorecard adequately assessed community disaster resilience as they understood it. An additional individual evaluation form and a self-addressed envelope were left for members to complete and return; however, very few individual responses were received. As such, evaluation is based primarily on the community group discussions.

The support of local government personnel was consistently excellent in all communities participating as trial sites. The experience of the test communities highlighted the importance of the local government’s role in supporting this initiative by bringing the Community Scorecard Working Group together, providing the venue and, in particular, the personnel to co-ordinate the meetings and access information from the databases, which many of the community members were not familiar with.

The trial of the Scorecard was extremely valuable and the feedback allowed refinements to the instructions and the Scorecard. The conclusion voiced by communities and reached by the project team was that the user-friendly Scorecard is a workable tool for people to both assess their community disaster resilience and come together to plan what might further strengthen resilience.

The definition of community disaster resilience was thought to be understandable and the four components of disaster resilience, the questions and criteria, were considered appropriate measures of resilience. The suggested process of the three community meetings was regarded as sufficient and the Community Scorecard Working Group members reported enjoying the discussions that the scoring generated. They found them as valuable as the final score itself, affirming the positive process nature of community resilience building.

The actions taken in this process can feed into a cycle of quality improvement for local government and local emergency services. A critical point identified is that outcomes must be shared with the wider community in a way that engages their interest. Because this was an initial application of the toolkit, follow-up with communities over a period of a year or more would allow a more definitive assessment of whether or not the engagement was sustained and the identified improvements were made.

The final Scorecard with toolkit is available under the ‘Tools’ tab on the Torrens Resilience Institute website: www.torrensresilience.org and includes:

The remaining challenge is to encourage community participation in the scorecard process and to maintain the motivation of communities to accept collective responsibility to reduce the destructive impact of disruptive events, emergencies and disasters.

Cox RS & Perry K-ME 2011, Like a fish out of water: reconsidering disaster recovery and the role of place and social capital in community disaster resilience, American Journal of Community Psychology, vol. 48, pp. 395-411.

Emergency Volunteering 2011, Disaster Readiness Index. Volunteering Qld. & Emergency Management Queensland. Interactive Website. At: http://www.emergencyvolunteering.com.au/home/disaster-ready/menu/readiness-index, [April 2012].

Fekete A 2011, Common Criteria for the Assessment of Critical Infrastructures, International Journal of Disaster Risk Science, vol. 2, no. 1, pp. 15-24.

Fekete A, Damm M & Birkmann J 2009, Scales as a Challenge for Vulnerability Assessment, Natural Hazards, 55 (2010), Springer Science + Business Media, pp. 729-747.

Flanagan BE, Gregory EW, Hallisey EJ, Heitgerd JL & Lewis B 2011, A Social Vulnerability Index for Disaster Management, Journal of Homeland Security and Emergency Management, vol. 8, no. 1, Article 3, Berkeley Electronic Press.

Frommer B 2011, Climate Change and the Resilient Society: Utopia or Realistic Option for German Regions? Natural Hazards, 58, Springer Science + Business Media, pp. 85-101.

Insurance Council of Australia 2008, Improving Community Resilience to Extreme Weather Events, Insurance Council of Australia, Sydney.

James Cook University 2010, Know your Patch to Grow your Patch, Understanding Communities Project, Bushfire CRC and Centre for Disaster Studies, James Cook University.

Longstaff PH, Armstrong NJ & Perrin K 2010, Building Resilient Communities: Tools for Assessment (White Paper). Syracuse, NY: Institute for National Security and Counterterrorism (INSCT).

Maguire B & Cartwright S 2008, Assessing a Community’s Capacity to Manage Change: A Resilience Approach to Social Assessment, Bureau of Rural Sciences, Commonwealth of Australia.

Mayunga JS 2007, Understanding and Applying the Concept of Community Disaster Resilience: A Capital Based Approach, Summer Academy for Social Vulnerability and Resilience Building, Munich.

Zobel CW 2011, Representing Perceived Tradeoffs in Defining Disaster Resilience, Decision Support Systems, 50, Elsevier, pp. 394-403.

Professor Paul Arbon is the Director of Torrens Resilience Institute in Adelaide. His team members on this project were Professor Kristine Gebbie, Project Lead, Dr Lynette Cusack, Senior Lecturer, Dr Sugi Perera, Chief Project Officer, and Sarah Verdonk, Research Officer.

The Community Disaster Resilience Scorecard was completed with funding provided under the Australian Government National Emergency Management Program Grant scheme. The full project report, the toolkit and associated guidance are available under ‘Tools’ on the Institute website at www.torrensresilience.org