In recent years organisations have increasingly focused on their ability to respond to crises; however, they often struggle to prioritise and allocate resources to building resilience, given the difficulty of demonstrating progress or success. Dervitsiotis (2003) argues that conventional business excellence, such as that measured by the EFQM model or the Baldrige Awards, is not as effective in crisis situations. These traditional models, which are used to measure success during business as usual, do not provide a measure of resilience during and after crises.

The majority of research into organisational resilience has been qualitative and descriptive; attempts to quantitatively measure resilience have been limited (Somers, 2007; Webb et al., 1999). This research attempts to fill that gap by developing a web-based survey tool to quantitatively measure resilience. Measurements of resilience will enable organisations to answer key questions including: how resilient are we, how does this differ from our expectations and those of our stakeholders, and what can we do to improve? Given this information, organisations will be able to better allocate resources to resilience and to demonstrate progress over time.

Seville et al. (2008, p. 2) define organisational resilience as the ability of an organisation to “…survive, and potentially even thrive, in times of crisis”. Organisations often refer to resilience in terms of the redundancy of their physical resources such as plant and machinery, locations or buildings, and the lifelines infrastructure on which they rely. The resilience of physical resources is important and is often most visible during and after a natural disaster such as an earthquake or flood which interrupts the flow of these resources. However, organisations also have to manage crises such as financial downturns, pandemics, large scale product faults, supply chain failures, industrial accidents and staffing issues. Resilience to these types of crises is often (although not exclusively) less visible and is manifested through an organisation’s culture. Mitroff et al. (1989) argue that organisational culture is the most influential factor on crisis management and discuss whether or not some organisations exhibit characteristics that make them crisis-prone as opposed to crisis-prepared. Smith (1990) describes how organisations often generate crises through three phases. One of the phases, the ‘crisis of management’, is characterised by a culture which lacks awareness and mindfulness (Weick & Sutcliffe, 2007) and so creates cascade failures and crises (Mitroff et al., 1989). Hamel and Välikangas (2003, p. 2) discuss strategic resilience, arguing that it,

“…is about continuously anticipating and adjusting to deep, secular trends that can permanently impair the earning power of a core business. It’s about having the capacity to change before the case for change becomes desperately obvious”.

In order to measure resilience it is necessary to identify its constituent parts (Paton & Johnston, 2006). McManus et al. (2008) do this, providing a useful definition which is used as the basis for indicators adapted and developed through this research. They define organisational resilience as,

“…a function of an organisation’s overall situation awareness, keystone vulnerability and adaptive capacity in a complex, dynamic and interdependent system” (McManus et al., 2008, p. 82).

McManus et al. (2008) use this definition to identify three dimensions of organisational resilience: situation awareness, management of keystone vulnerabilities, and adaptive capacity. Situation awareness describes an organisation’s understanding of its business landscape, its awareness of what is happening around it, and what that information means for the organisation now and in the future (Endsley et al., 2003). Management of keystone vulnerabilities describes the identification, proactive management, and treatment of vulnerabilities that, if realised, would threaten the organisation’s ability to survive. This includes emergency and disaster management, and business continuity, and covers many of the traditional crisis planning activities. Adaptive capacity describes an organisation’s ability to constantly and continuously evolve to match or exceed the needs of its operating environment before those needs become critical (Hamel & Välikangas, 2003). In their discussion of the definition of organisational resilience, McManus et al. (2007) use the results of their qualitative study to identify fifteen indicators, five for each of the dimensions. These indicators and dimensions were reviewed as part of this research and one further dimension, ‘resilience ethos’, as well as a further eight indicators, were added to the model for evaluation; these can be seen in Table 1. The shaded areas in Table 1 show the resilience ethos dimension and the eight indicators that were added to the original model to form the basis of the resilience measurement tool. Resilience ethos describes a culture where top management is committed to balancing profit-driven pressures such as efficiency with the need to be resilient (Wreathall, 2006). This culture represents “…a willingness to share and refresh knowledge and constant readiness to take community action” (Granatt & Paré-Chamontin, 2006, p. 53).

Table 1: Updated Dimensions and Indicators of Organisational Resilience (Adapted from McManus, et al., 2007, p. 20)

A. Resilience Ethos

| Code | Definition |
|---|---|
| RE1 | Commitment to Resilience |
| RE2 | Network Perspective |

B. Organisational Resilience Factors

| Situation Awareness | | Management of Keystone Vulnerabilities | | Adaptive Capacity | |
|---|---|---|---|---|---|
| Code | Definition | Code | Definition | Code | Definition |
| SA1 | Roles & Responsibilities | KV1 | Planning Strategies | AC1 | Silo Mentality |
| SA2 | Understanding & Analysis of Hazards & Consequences | KV2 | Participation in Exercises | AC2 | Communications & Relationships |
| SA3 | Connectivity Awareness | KV3 | Capability & Capacity of Internal Resources | AC3 | Strategic Vision & Outcome Expectancy |
| SA4 | Insurance Awareness | KV4 | Capability & Capacity of External Resources | AC4 | Information & Knowledge |
| SA5 | Recovery Priorities | KV5 | Organisational Connectivity | AC5 | Leadership, Management & Governance Structures |
| SA6 | Internal & External Situation Monitoring & Reporting | KV6 | Robust Processes for Identifying & Analysing Vulnerabilities | AC6 | Innovation & Creativity |
| SA7 | Informed Decision Making | KV7 | Staff Engagement & Involvement | AC7 | Devolved & Responsive Decision Making |

The resilience measurement tool was developed as a web-based survey which uses the perception of staff members to measure the resilience of organisations. A cross section of staff from throughout the organisation were asked to take part in the survey to maximise the representativeness of the evaluation. In addition, one senior manager from each organisation completed a version of the survey that included additional questions relating to business performance.

In total, the survey contains 92 questions and takes between 20 and 30 minutes to complete. Each indicator is assessed using three or more questions which are averaged to form the score for that indicator. The majority of questions asked participants to gauge their agreement with a statement, e.g. ‘Most people in our organisation have a clear picture of what their role would be in a crisis’. This was done on a four-point scale ranging from ‘strongly agree’ to ‘strongly disagree’; a ‘don’t know’ option was also provided. The data provided by staff was then averaged to provide a submission on behalf of the organisation.
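The two averaging steps described above can be sketched in Python. This is only an illustration under stated assumptions: the paper does not give the numeric coding of the four-point scale, the names of the two middle scale points, the conversion to a percentage, or the treatment of ‘don’t know’ answers. Here the scale is coded 4 to 1, rescaled linearly to 0-100%, and ‘don’t know’ answers are left out of the average. All names (`LIKERT_POINTS`, `indicator_score`, `organisation_score`) are hypothetical.

```python
from statistics import mean

# ASSUMPTION: numeric coding of the four-point agreement scale.
# Only the end points are named in the paper; the middle labels are guesses.
LIKERT_POINTS = {
    "strongly agree": 4,
    "agree": 3,
    "disagree": 2,
    "strongly disagree": 1,
}
DONT_KNOW = "don't know"


def indicator_score(answers):
    """Average one respondent's answers for one indicator, as a percentage.

    ASSUMPTION: 'don't know' answers are skipped, and the 1-4 coding is
    rescaled linearly so that 1 maps to 0% and 4 maps to 100%.
    Returns None when every answer is 'don't know'.
    """
    points = [LIKERT_POINTS[a] for a in answers if a != DONT_KNOW]
    if not points:
        return None
    return (mean(points) - 1) / 3 * 100


def organisation_score(staff_scores):
    """Average the staff-level scores into one organisational score."""
    valid = [s for s in staff_scores if s is not None]
    return mean(valid) if valid else None


# Hypothetical respondent answering three questions for one indicator.
example = indicator_score(["strongly agree", "agree", DONT_KNOW])
```

A different coding or a different rule for ‘don’t know’ answers would change the resulting percentages, so the figures this sketch produces are not comparable with the scores reported in the tables.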

In total, 249 individuals representing 68 organisations from a cross-section of industry sectors took part in the study. Organisations varied in size from 1 to 210 staff members and participation within organisations ranged from 1% to 100%. Table 2 shows how many organisations in Auckland scored within each score boundary for each of the dimensions of organisational resilience, and the range of scores that they achieved.

Table 2: Number of Organisations Scoring Within Each Score Boundary for the Dimensions and Overall Organisational Resilience

| Benchmark Resilience Score Boundary | Resilience Ethos | Situation Awareness | Management of Keystone Vulnerabilities | Adaptive Capacity | Overall Resilience |
|---|---|---|---|---|---|
| 88-100% | 7 | 1 | 0 | 2 | 0 |
| 79-87% | 21 | 10 | 0 | 11 | 6 |
| 60-78% | 34 | 50 | 42 | 48 | 51 |
| 51-59% | 4 | 6 | 17 | 6 | 5 |
| 42-50% | 1 | 1 | 8 | 0 | 2 |
| 0-41% | 1 | 0 | 1 | 1 | 0 |
| Low-High Scores | 33-92% | 49-88% | 33-77% | 40-95% | 44-83% |

Note: The bottom row shows the lowest and highest scores for each dimension and the range is shown in brackets.
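The counting behind Table 2 can be sketched as a simple binning step. The score boundaries below are taken from the table; everything else is an assumption made for illustration. In particular, the boundaries are stated in whole percentages, so this sketch rounds each score half up to a whole percentage before binning, which the paper does not confirm. The function names and the example scores are hypothetical.

```python
from collections import Counter

# Score boundaries as listed in Table 2 (inclusive whole percentages).
BOUNDARIES = [(88, 100), (79, 87), (60, 78), (51, 59), (42, 50), (0, 41)]


def score_boundary(score):
    """Return the Table 2 boundary label that a percentage score falls in.

    ASSUMPTION: scores are rounded half up to a whole percentage first.
    """
    whole = int(score + 0.5)
    for low, high in BOUNDARIES:
        if low <= whole <= high:
            return f"{low}-{high}%"
    raise ValueError(f"score out of range: {score}")


def count_by_boundary(scores):
    """Count how many scores fall within each boundary, in table order."""
    counts = Counter(score_boundary(s) for s in scores)
    return {f"{low}-{high}%": counts.get(f"{low}-{high}%", 0)
            for low, high in BOUNDARIES}


# Hypothetical scores for four organisations on one dimension.
example_counts = count_by_boundary([92, 65, 70, 41])
```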

For each of the four dimensions and overall resilience, the majority of organisations scored between 60% and 78%, achieving a good score. This means that organisations in Auckland generally demonstrated a culture that supports and prioritises resilience and enables an awareness of the organisations’ internal and external environment. Organisations generally have a good ability to adapt to their environment and use their situation awareness to inform and manage their planning efforts.

The size of the range of the scores for each dimension provides evidence that organisations differ in their strengths and weaknesses even though they may achieve similar overall resilience scores. Of those 68 organisations that achieved a good score (60-78%) for their overall resilience, 49 scored poorly or very poorly (0-50%) for at least one indicator. This shows that even organisations that achieve a good overall score are still likely to be able to improve significantly.
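The check described in this paragraph, a good overall score that hides at least one poor indicator, can be sketched as follows. The 60-78% and 0-50% thresholds come from the text; the function names and the example organisation are hypothetical and do not describe any participant in the study.

```python
def weak_indicators(indicator_scores, threshold=50):
    """Return the codes of indicators scoring poorly or very poorly (0-50%)."""
    return sorted(code for code, score in indicator_scores.items()
                  if score <= threshold)


def has_hidden_weakness(overall_score, indicator_scores):
    """True when a good overall score (60-78%) coexists with a weak indicator."""
    return 60 <= overall_score <= 78 and bool(weak_indicators(indicator_scores))


# Hypothetical organisation: good overall, but two weak indicators.
example_indicators = {"RE1": 77, "KV1": 45, "KV4": 38, "AC6": 72}
example_flag = has_hidden_weakness(66, example_indicators)
```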

Table 3 shows average scores for each of the four dimensions of organisational resilience by industry sector, as well as the average overall resilience. The highest average score for any one industry was achieved by the Government, Defence and Administration sector, which averaged 92% for its resilience ethos and also had the highest average overall resilience score (78%). For this sector, a breakdown of its resilience strengths and weaknesses would show which indicators are driving its high scores. These strengths could then be monitored to ensure that the high scores are maintained over time. An example of this kind of analysis is included in the discussion of the individual organisation below.

The lowest average score for any one industry was recorded by the Agriculture, Forestry and Fishing sector, which averaged 49% for its management of keystone vulnerabilities. This stems from a particular weakness in its planning strategies, including a lack of formal planning and a poor awareness of planning arrangements among staff. Again, this information comes from analysing sector scores for individual indicators and could provide industry groups, regulators and government groups with direction on the information or resources that might help an industry as a whole to improve its resilience.

The Communications sector achieved the highest average for the adaptive capacity dimension. This stems from a score of 89% in the strategic vision and outcome expectancy indicator; it was one of only two sectors to score ‘excellent’ on any one indicator. The strategic vision and outcome expectancy indicator is designed to measure whether the organisation has a defined strategic vision and whether that vision is understood and shared across the organisation. Questions relating to this indicator focus on whether or not the organisation has a formalised strategic vision, whether or not staff recognise that vision as reflecting the values that they aspire to, and whether the vision is continuously re-evaluated as the organisation changes.

Table 3: Average Scores for Each of the Four Dimensions of Organisational Resilience by Industry Sector

| Industry Sector | Resilience Ethos | Situation Awareness | Management of Keystone Vulnerabilities | Adaptive Capacity | Overall Resilience |
|---|---|---|---|---|---|
| Agriculture, Forestry and Fishing | 75% | 65% | 49% | 65% | 64% |
| Communication | 80% | 76% | 61% | 78% | 74% |
| Construction | 58% | 66% | 58% | 76% | 65% |
| Cultural and Recreational Services | 77% | 63% | 60% | 77% | 69% |
| Education | 75% | 64% | 59% | 70% | 67% |
| Finance and Insurance | 67% | 69% | 60% | 62% | 65% |
| Government Defence and Administration | 92% | 72% | 74% | 75% | 78% |
| Health and Community | 86% | 75% | 69% | 77% | 77% |
| Manufacturing | 71% | 69% | 57% | 68% | 66% |
| Personal and Other Services | 75% | 70% | 64% | 70% | 70% |
| Property and Business Services | 75% | 71% | 61% | 71% | 70% |
| Retail Trade | 85% | 71% | 58% | 70% | 71% |
| Wholesale Trade | 71% | 66% | 57% | 71% | 66% |
| All Sectors | 74% | 68% | 59% | 71% | 69% |

Note: These figures relate to the model of organisational resilience proposed through this paper which may alter following subsequent analysis of the resilience indicators. Data shown represents averaged scores and so cannot be interpreted using the score boundaries shown in Table 2.
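As a worked check of the sector comparisons discussed above, the dimension averages from Table 3 can be loaded into a small Python structure and searched for the highest and lowest values. The figures are copied from the table (the ‘All Sectors’ row and the overall resilience column are left out); the variable and function names are hypothetical.

```python
DIMENSIONS = (
    "Resilience Ethos",
    "Situation Awareness",
    "Management of Keystone Vulnerabilities",
    "Adaptive Capacity",
)

# Sector averages (%) for the four dimensions, copied from Table 3.
TABLE_3 = {
    "Agriculture, Forestry and Fishing": (75, 65, 49, 65),
    "Communication": (80, 76, 61, 78),
    "Construction": (58, 66, 58, 76),
    "Cultural and Recreational Services": (77, 63, 60, 77),
    "Education": (75, 64, 59, 70),
    "Finance and Insurance": (67, 69, 60, 62),
    "Government Defence and Administration": (92, 72, 74, 75),
    "Health and Community": (86, 75, 69, 77),
    "Manufacturing": (71, 69, 57, 68),
    "Personal and Other Services": (75, 70, 64, 70),
    "Property and Business Services": (75, 71, 61, 71),
    "Retail Trade": (85, 71, 58, 70),
    "Wholesale Trade": (71, 66, 57, 71),
}


def cells():
    """Yield (score, sector, dimension) for every cell of the table."""
    for sector, scores in TABLE_3.items():
        for dimension, score in zip(DIMENSIONS, scores):
            yield score, sector, dimension


def top_sector(dimension):
    """Return the sector with the highest average for one dimension."""
    column = DIMENSIONS.index(dimension)
    return max(TABLE_3, key=lambda sector: TABLE_3[sector][column])


highest = max(cells())  # highest single sector-by-dimension average
lowest = min(cells())   # lowest single sector-by-dimension average
```

Here `highest` and `lowest` correspond to the Government, Defence and Administration resilience ethos score and the Agriculture, Forestry and Fishing keystone vulnerabilities score, and `top_sector("Adaptive Capacity")` returns the Communication sector, in line with the discussion in the text.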

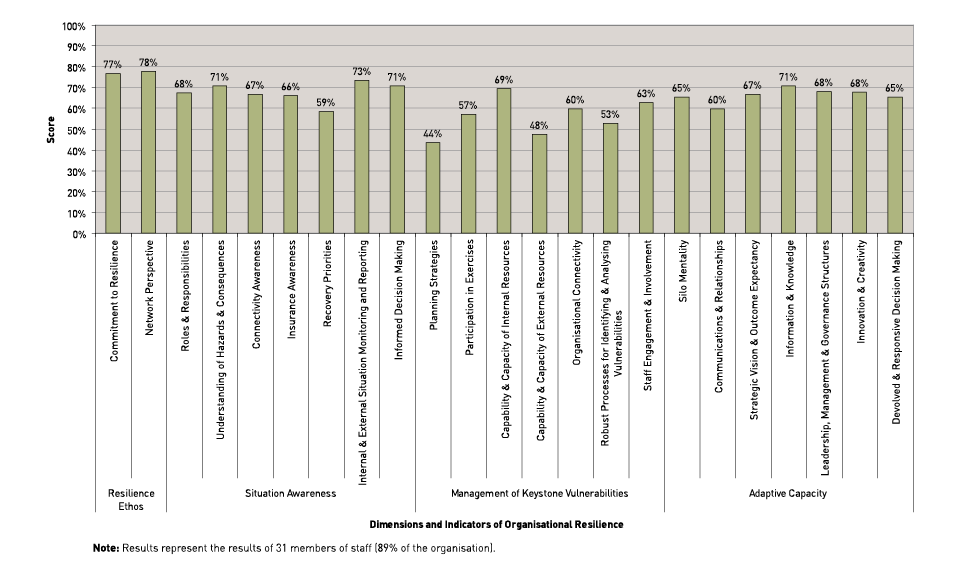

Using the resilience measurement tool, individual organisations can see how their resilience compares to other organisations, and how their departments or business units, sites or locations, compare with each other. This then provides important information for resourcing, staff allocation, corporate processes, knowledge management and organisational culture. Each organisation received a results report detailing their resilience strengths and weaknesses. As an example, Graph 1 shows an organisation’s scores (strengths and weaknesses) for each of the indicators of organisational resilience.

This organisation’s resilience strengths include its commitment to resilience (77%) and network perspective (78%) as well as its internal and external situation monitoring and reporting (73%) (as shown in Graph 1). This means that the organisation has a culture that supports and prioritises resilience and that it has processes in place for monitoring changes and trends in its environment over time. These changes and trends could include regulatory changes, increasing or slowing demand for products or services, social changes, and technological development. Knowledge of these conditions before they contribute to a crisis for the organisation could significantly increase the organisation’s resilience. Alternatively, this knowledge could be translated into competitive advantage and opportunity.

Graph 1. An example of an organisation’s scores for the indicators of organisational resilience

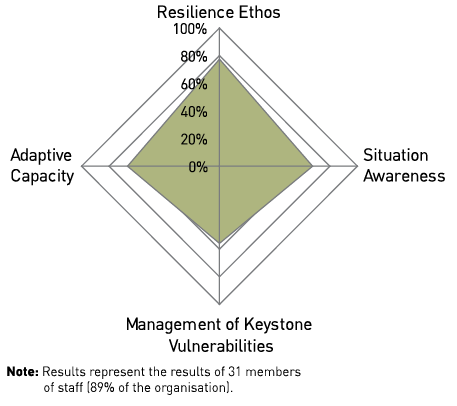

Grouping the indicators, as shown in Table 1, this organisation’s strongest dimension is its resilience ethos and its weakest is its management of keystone vulnerabilities; this is summarised in Figure 1. Based on the definitions of these dimensions discussed earlier, to improve, this organisation should focus on formalising, sharing and exercising its plans and arrangements, as well as leveraging its current strengths in a crisis.

Figure 1. Example of an Organisation’s Scores for each of the Indicators of Organisational Resilience
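Grouping indicator scores into dimensions can be sketched using the code prefixes from Table 1 (RE, SA, KV, AC). The paper does not state how dimension scores are derived from indicator scores, so the unweighted mean used here is an assumption. In the example, the values for RE1, RE2 and SA6 are those quoted in the text for the example organisation; the remaining values and all function names are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Indicator code prefixes and their dimensions, as in Table 1.
DIMENSION_NAMES = {
    "RE": "Resilience Ethos",
    "SA": "Situation Awareness",
    "KV": "Management of Keystone Vulnerabilities",
    "AC": "Adaptive Capacity",
}


def dimension_scores(indicator_scores):
    """Group indicator scores by code prefix and average each group.

    ASSUMPTION: a dimension score is the unweighted mean of its indicators.
    """
    grouped = defaultdict(list)
    for code, score in indicator_scores.items():
        grouped[DIMENSION_NAMES[code[:2]]].append(score)
    return {name: mean(scores) for name, scores in grouped.items()}


def strongest_and_weakest(indicator_scores):
    """Return the names of the highest and lowest scoring dimensions."""
    scores = dimension_scores(indicator_scores)
    return max(scores, key=scores.get), min(scores, key=scores.get)


# RE1, RE2 and SA6 are quoted in the text; the other values are made up.
example_indicators = {"RE1": 77, "RE2": 78, "SA6": 73,
                      "KV1": 40, "KV2": 50, "AC3": 70}
example_result = strongest_and_weakest(example_indicators)
```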

Organisations can also use this level of analysis to examine how their resilience fluctuates across hierarchical levels in their organisation. For example, many staff within participating organisations were not aware that their organisation had an emergency plan; interestingly, some senior managers were not aware of existing plans either. This is evidence of silo mentality within organisations, where emergency plans and arrangements are developed within a department or by a specific individual in isolation, and are not widely shared or communicated. This silo mentality, in particular, contributed to lower scores for the planning strategies indicator for most of the organisations that took part.

For organisations to invest in resilience there must be an evidenced way of measuring it, and of demonstrating changes and trends in this measurement over time. This will then enable organisations to make a business case for resilience and to show the value added by resilience management programs.

Overall the Auckland organisations taking part in this study have a good level of resilience. Common strengths include a good resilience ethos and a high level of adaptive capacity; however the distribution of these strengths varies across industry sectors. Common weaknesses include organisations’ ability to utilise resources from outside of their organisation during a crisis. The high level of interconnectivity and interdependency between organisations makes this a critical indicator that organisations and industry groups should continue to monitor.

The resilience measurement tool also enables analysis of organisational resilience by industry sector. Industry groups, regulators, and local and regional government groups may find this information useful in understanding training and education needs, the most common resilience challenges, and how they can help organisations to address these.

Analysis of organisational resilience by industry sector is also important for individual organisations. Organisations can identify whether they are more or less resilient than other similar organisations and can also identify the resilience strengths which set them apart from others. These strengths can then be translated into competitive advantage during and after industry-wide crises or negative trends such as rising costs of raw materials, agricultural disease outbreaks, or product recalls. Individual organisations can also use the tool to examine their resilience internally, allowing them to address gaps in awareness and silos between offices, departments and business units, or organisational functions.

The limitations of the tool at this time are that it is still in its early stages of development and that it requires a high level of staff participation to create accurate results for individual organisations. This in itself though is not a bad thing as staff participation will increase awareness and generate discussions around resilience. The next steps in developing this tool are to complete further tests including organisations in other areas of New Zealand and in other countries.

Dervitsiotis, K. N. (2003). The Pursuit of Sustainable Business Excellence: Guiding transformation for effective organisational change. Total Quality Management, 14(3), 251-267.

Endsley, M. R., Bolte, B., & Jones, D. G. (2003). Designing for Situation Awareness: An Approach to User-Centred Design. London: Taylor & Francis.

Granatt, M., & Paré-Chamontin, A. (2006). Cooperative Structures and Critical Functions to Deliver Resilience Within Network Society. International Journal of Emergency Management, 3(1), 52-57.

Hamel, G., & Välikangas, L. (2003). The Quest for Resilience. Harvard Business Review, 81(9), 52-63.

McManus, S., Seville, E., Brunsdon, D., & Vargo, J. (2007). Resilience Management: A Framework for Assessing and Improving the Resilience of Organisations (No. 2007/01): Resilient Organisations.

McManus, S., Seville, E., Vargo, J., & Brunsdon, D. (2008). A Facilitated Process for Improving Organizational Resilience. Natural Hazards Review, 9(2), 81-90.

Mitroff, I. I., Pauchant, T. C., Finney, M., & Pearson, C. (1989). Do (Some) Organisations Cause their Own Crises? The Cultural Profiles of Crisis-prone vs. Crisis-prepared Organisations. Industrial Crisis Quarterly, 3(4), 269-283.

Paton, D., & Johnston, D. (2006). Identifying the Characteristics of a Resilient Society. In D. Paton & D. Johnston (Eds.), Disaster Resilience: An integrated approach: Charles C Thomas Publisher Ltd.

Seville, E., Brunsdon, D., Dantas, A., Le Masurier, J., Wilkinson, S., & Vargo, J. (2008). Organisational Resilience: Researching the Reality of New Zealand Organisations. Journal of Business Continuity and Emergency Management, 2(2), 258-266.

Smith, D. (1990). Beyond Contingency Planning: Towards a model of crisis management. Industrial Crisis Quarterly, 4(4), 263-275.

Somers, S. (2007). Building Organisational Resilience Potential: An Adaptive Strategy for Operational Continuity in Crisis. Unpublished Doctoral, Arizona State University, Arizona.

Webb, G. R., Tierney, K., & Dahlhamer, J. M. (1999). Businesses and Disasters: Empirical Patterns and Unanswered Questions (No. Preliminary Papers;281). Delaware: Disaster Research Center.

Weick, K. E., & Sutcliffe, K. M. (2007). Managing the Unexpected: Resilient Performance in an Age of Uncertainty (2nd ed.). San Francisco, CA: Jossey-Bass.

Wreathall, J. (2006). Properties of Resilient Organizations: An Initial View. In E. Hollnagel, D. D. Woods & N. Leveson (Eds.), Resilience Engineering: Concepts and Precepts (pp. 275-286). Aldershot: Ashgate.

Amy Stephenson is a Ph.D. candidate at the University of Canterbury, New Zealand. Her research focuses on measuring, comparing and benchmarking the resilience of organisations.

John Vargo is a Senior Lecturer in Information Systems, Founder of the e-Commerce programme and Co-Founder of the eSecurity Lab at the University of Canterbury, New Zealand. His interests focus on the conjunction of business practice and technology application with an emphasis on building resilience in the face of systemic insecurity.

Erica Seville is a Research Fellow in the Civil and Natural Resources Engineering Department at the University of Canterbury, New Zealand, and leads the Resilient Organisations Research Programme. Her research interests focus on building effective capability in risk management and resilience.